OnPath Testing was contacted in early January 2017 to provide software quality assurance testing for this project as an outside vendor, complementing a development team that had started work several months earlier. The developers understood the importance of a good QA process, and OnPath began with a mandate to deliver solid manual testing results. As we shall see, however, we identified many additional needs over time and ended up providing a far broader scope of quality activities, which contributed to broad user acceptance and overall project success.

ClearCaptions is a US-based technology company focused on developing products that improve the lives of individuals with hearing loss. The ClearCaptions Blue phone is a groundbreaking desktop telephone with a screen that displays captions in real time as users have a conversation. Additional features, such as higher decibel output and support for a wide range of assistive listening devices, round out the feature set of this phone and provide an ease of use that is rare in the world of the hearing impaired.

The Blue Phone

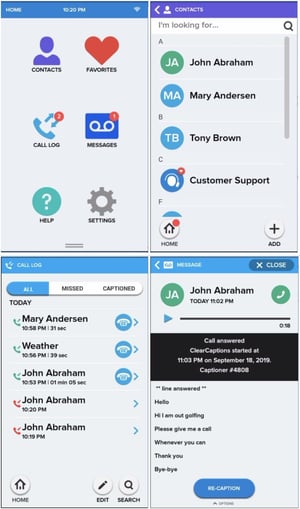

The Blue phone is essentially a mobile application built on top of the stock Android OS (an iOS version is currently under development), housed within a desktop phone device that includes typical physical features such as a handset, keypad, speakerphone, and connection ports for 2.5 mm and 3.5 mm headset devices. The primary interface for the app is a 7” color touchscreen where the user can manage typical mobile phone features such as a contact list, call history, and settings. The rest of the physical phone works no differently than a typical desktop device. The phone is physically connected via a standard telephone cable (using an RJ-11 port) to either the older Plain Old Telephone Service (POTS) or, more typically, a router-based telephone service from a telecommunications provider such as AT&T, Verizon, or Comcast.

Background

The QA team was brought in several months after development had begun, which was perhaps not ideal but was also not a huge barrier. Based on the output of the 4-6 full-time developers, we deemed an initial QA team of 3 manual test engineers to be sufficient, using the common rule-of-thumb estimate of 1 QA resource per 2-3 development resources. Over time, the development team grew in number with QA following suit, up to 5 manual testers plus a QA Manager.
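The rule of thumb above is simple arithmetic, and a minimal sketch makes the range explicit. The function name and the choice to round up to whole people are assumptions made for illustration, not part of the original estimate.

```python
import math
from typing import Tuple


def qa_headcount_range(devs: int,
                       min_devs_per_qa: int = 2,
                       max_devs_per_qa: int = 3) -> Tuple[int, int]:
    """Return (low, high) QA headcount for a given number of developers.

    Applies the "1 QA per 2-3 developers" rule of thumb from the text.
    Rounding up to whole people is an assumption of this sketch.
    """
    low = math.ceil(devs / max_devs_per_qa)   # leanest staffing: 1 QA per 3 devs
    high = math.ceil(devs / min_devs_per_qa)  # fullest staffing: 1 QA per 2 devs
    return low, high


# The project started with 4-6 developers.
for team_size in (4, 6):
    print(team_size, qa_headcount_range(team_size))
```

Under these assumptions a team of 6 developers maps to 2-3 testers, so the initial team of 3 sits at the upper end of the range.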

Although automation was a consideration (and was explored to some degree later in the project), our focus was always on manual testing given the unusual nature of the project: only a live person could judge many of the dynamic aspects of the application's behavior in use. For example:

- When the phone is on-hook, no dial tone should be heard on either speakerphone or headset.

- When the user lifts the handset or presses the speakerphone button, a dial tone should be heard within a reasonable timeframe (a few milliseconds).

- The first press of a keypad button should break the dial tone (i.e., the line falls silent).

- Each keypad number should produce the appropriate audio tone (its DTMF tone).

- After any keypad press, a significant pause (>2 sec) should automatically initiate the call.

- And so on.
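The expectations in the list above can be written down as a small state model, which is useful as a shared reference even when the checks themselves are performed by hand. The following is a minimal sketch only: `PhoneModel`, its method names, and the `tick` clock are hypothetical and do not come from the Blue application. The DTMF frequency pairs are the standard values for a 12-key pad, and the 2-second threshold is taken from the list.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Standard DTMF frequency pairs in Hz (row tone, column tone) for a 12-key pad.
DTMF_HZ = {
    "1": (697, 1209), "2": (697, 1336), "3": (697, 1477),
    "4": (770, 1209), "5": (770, 1336), "6": (770, 1477),
    "7": (852, 1209), "8": (852, 1336), "9": (852, 1477),
    "*": (941, 1209), "0": (941, 1336), "#": (941, 1477),
}

# Pause after the last key press that should start the call (">2 sec" in the text).
INTERDIGIT_TIMEOUT_S = 2.0


@dataclass
class PhoneModel:
    """Toy model of the expected dialing behavior (hypothetical, not the real app)."""
    off_hook: bool = False
    dial_tone: bool = False
    digits: str = ""
    call_started: bool = False
    last_key_time: Optional[float] = None
    tones: List[Tuple[int, int]] = field(default_factory=list)

    def go_off_hook(self) -> None:
        """Lifting the handset or pressing speakerphone starts the dial tone."""
        self.off_hook = True
        self.dial_tone = True

    def press_key(self, key: str, now: float) -> None:
        """Record a key press at time `now` (seconds)."""
        if not self.off_hook or self.call_started:
            return  # on-hook and mid-call key presses are out of scope here
        self.dial_tone = False             # the first key press breaks the dial tone
        self.tones.append(DTMF_HZ[key])    # each key plays its own DTMF pair
        self.digits += key
        self.last_key_time = now

    def tick(self, now: float) -> None:
        """Advance the clock; a long enough pause after a key press starts the call."""
        waiting = (self.off_hook and not self.call_started
                   and self.last_key_time is not None)
        if waiting and now - self.last_key_time > INTERDIGIT_TIMEOUT_S:
            self.call_started = True
```

A tester could walk the model through a scenario (go off-hook, press a few keys, advance the clock past the timeout) and compare each resulting state with what is heard on the physical device. The model says nothing about audio quality or timing on real hardware, which is exactly the part that needed a live person.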

As you can see, the interaction between the software of the Blue application and the hardware of the physical device, all operating within the larger context of the public switched telephone network (PSTN), created a unique, dynamic, and challenging system to manage from the software QA perspective. And of course, there were many other pieces of the overall architecture to consider, such as API calls, internal agent stations that connected to an active call and provided the captions, and internal systems that fed data to and consumed data from the backend for activities such as provisioning new lines and freeing up resources after the end of a call.

Project Goals

The aim of this project was to provide immediate quality procedures, activities, and results for the developers and internal client stakeholders, to be sure the application met its usability and functional milestones. As the OnPath Testing engineers became integrated into the dev team and the work progressed through the sprints, however, it became clear that our scope needed broadening:

- Requirements and Change Management were not being considered as changes occurred during the sprint cycles.

- Internal tools for proper testing (e.g., loading data onto the device, rolling back to previous releases, managing test data) were either non-existent, insufficient, or breaking.

- As the product moved into alpha and beta release, cross-team communications (such as with sales and support) were breaking down and becoming inefficient.

- Overall management of new features and bug tickets from sprint to sprint was unclear and caused confusion.

The release timeline was pushed back several times, but the product was successfully released into alpha in May 2018, beta in October 2018, and a pilot program by the end of that same year. These were all considered aggressive milestones at the time, but through the dedicated work of both development and QA we achieved them.

Methodology/Work Undertaken

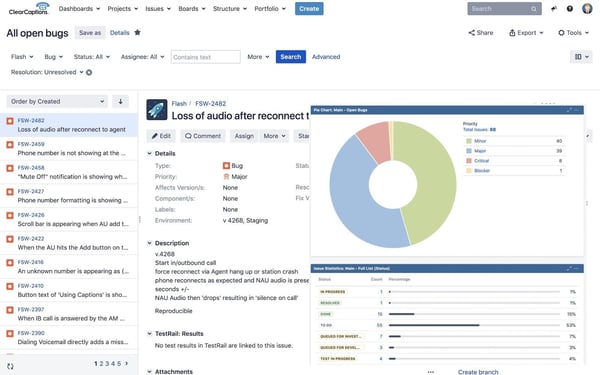

Development had already established a 2-week sprint cycle in which QA would receive a new build every Friday by end of day. This has proven to be a fine framework for the dev/test cycle and we have stayed with this structure. Dev and product management had already chosen JIRA/Confluence as the primary tools, which was also our preferred toolset, and at first we chose a simple spreadsheet to manage our test development, test cases, and test plans. These tools met the immediate need for test and defect management, along with metrics for regular status reports. Over time we customized JIRA heavily:

- Individual fields were modified, for example by adding specific values for Resolution States, Priorities, and other fields.

- Ticket workflow was customized to move tickets from QA to development and back to QA with respect to our releases.

- Reporting dashboards automated our status communications. For example, we created specific views for various teams: the dev team received information on the latest bugs and the current QA cycle; product management and stakeholders had a dashboard showing overall product health and the ticket repository; and support/sales had a view into the current bugs in production, other known issues, and the timeline for fixes.

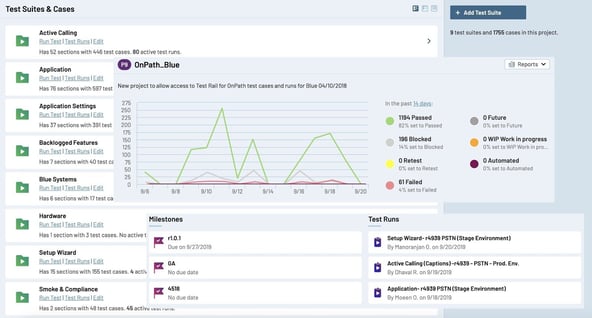

Google Docs and Sheets had initially proven sufficient for our test planning, but as our test suites grew into the thousands of test cases we needed a new solution and turned to TestRail by Gurock. Another team in ClearCaptions had already adopted this tool, so it made sense to standardize on existing knowledge. It has proven to be a good choice for both test management and status reporting, offering many options for customization along with a solid feature set. We set up milestones within the tool for each sprint release and maintain a dozen or so test plans, from the initial Smoke & Compliance plan to plans for each major feature and for test calling. Together these give us immediate visibility into our current execution efforts and results.

Challenges/Obstacles Overcome

One of the continuing major challenges in this project is the recurring regression issues that appear from sprint to sprint. Functionality that was confirmed working in one sprint was broken in the next, even when the devs were not working on issues in that particular feature or area of the app, which confounded QA and devs alike.

To manage this recurring behavior while still meeting the goal of quality assurance in every sprint, we took a multi-pronged approach of test prioritization and resource management:

- The Smoke/Compliance Test Plan was paramount and was run immediately after every push from dev to QA. If any single test in this plan failed, QA would fail the sprint.

- While the smoke plan was being run by one or two engineers, another engineer would start executing the specific tests that were impacted by the tickets fixed or implemented in the sprint.

- With 2-3 days remaining in the sprint, all efforts would turn towards regression, focusing on a variety of high-priority, targeted test cases as well as scenario-based testing that covered a broader swath of functionality. Time permitting, testers would move on to medium- and lower-priority cases, and we would always allow a few hours at the end of the sprint for ad hoc and exploratory tests.
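The prioritization described above can be sketched as a small planning function. This is an illustration under stated assumptions, not the team's tooling: the `Case` fields, the plan and priority labels, and the function names are hypothetical, and in practice this information lived in the test management tool rather than in code.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set

# Lower number means run earlier during the regression phase.
PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass(frozen=True)
class Case:
    name: str
    plan: str       # "smoke" for the Smoke/Compliance plan, otherwise "regression"
    priority: str   # "high", "medium", or "low"
    feature: str    # area of the app the case exercises


def plan_sprint(cases: Iterable[Case],
                touched_features: Set[str]) -> Dict[str, List[Case]]:
    """Split test cases into the three work queues described in the text.

    smoke      -> run first, immediately after the build arrives
    impacted   -> run alongside smoke by another engineer
    regression -> run in the last days of the sprint, highest priority first
    """
    cases = list(cases)
    smoke = [c for c in cases if c.plan == "smoke"]
    others = [c for c in cases if c.plan != "smoke"]
    impacted = [c for c in others if c.feature in touched_features]
    regression = sorted(
        (c for c in others if c.feature not in touched_features),
        key=lambda c: PRIORITY_RANK[c.priority],  # stable sort keeps input order within a priority
    )
    return {"smoke": smoke, "impacted": impacted, "regression": regression}


def smoke_gate_passes(smoke: Iterable[Case], results: Dict[str, bool]) -> bool:
    """The sprint fails if any smoke case failed; a missing result counts as a failure."""
    return all(results.get(c.name, False) for c in smoke)
```

Keeping the queues separate reflects the parallel work in the list: one or two engineers take the smoke queue while another starts on the impacted queue, and the whole team converges on the regression queue near the end of the sprint.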

Results

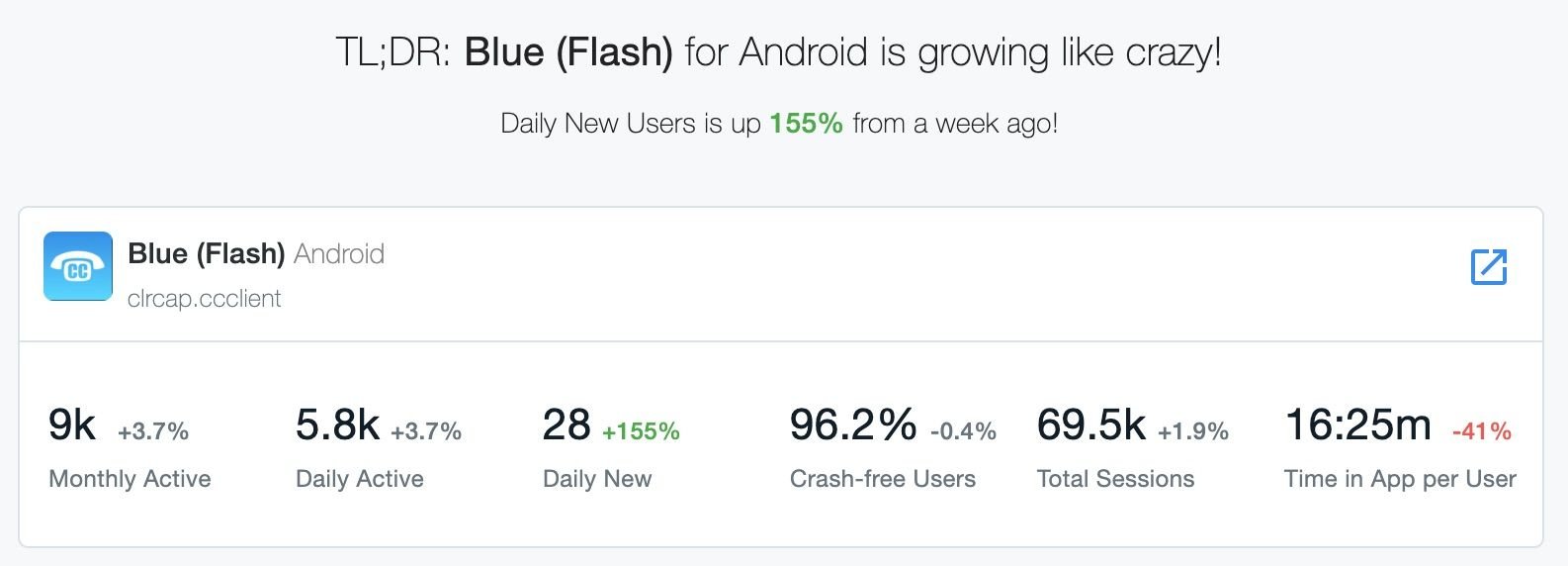

With the assistance of OnPath Testing engineers, the ClearCaptions Blue phone has successfully reached nearly 10,000 users with 70k total sessions in less than 10 months after launch. Although this may not sound impressive, it is a significant number given the relatively small user base of our target audience. Another excellent indicator of our success is the growth rate of 155% week over week.

As seen previously, we have nearly 2k test cases spread across 9 test suites. These test cases have generated several thousand bug reports, which are fed into automated dashboard reporting for all major stakeholders within the company.

Evaluation

The ClearCaptions Blue application and desktop phone were built as a cutting-edge replacement for a previous model, intended to become the flagship product of the ClearCaptions offering to the hearing impaired community. We have succeeded in this goal, despite our challenges along the way, and are currently expanding the functionality with a VOIP service offering, a standalone Android app download, and a port to iOS.

Looking back from a Quality Assurance perspective, the biggest improvement I would make in hindsight would be to migrate sooner from the spreadsheet-based approach to a more feature-rich, professional test management tool. Given the test execution complexities of this product across the software, the hardware, and the phone network, we needed to track our test planning and execution results very carefully, which demanded a better tool than a spreadsheet.

Success was determined by meeting the release dates and milestones for alpha, beta, and pilot, which the dev and QA team managed in each instance. There were occasional delays, but they were communicated to all relevant team members and managed accordingly, with eventual successful releases. Success was furthermore achieved in a more empirical sense through the goodwill across the different teams once the product went live, and the bumps in communications smoothed out once proper processes and data flow were put in place.